

Aug 01, 2025 · 19 min read

Vibe coding: How conversational AI might reshape developer teams and tech strategy

Welcome to the era of vibe coding, where natural language becomes the interface and engineers collaborate with intelligent systems as if they were teammates.

Stefanija Tenekedjieva Haans

Content Lead


Table of Contents

- Introduction: A new era of developer collaboration

- The rise of prompt-driven development

- How the AI-paired era of coding is changing developer roles

- How team structures are evolving

- Rethinking tech strategy and org design

- Challenges and organizational friction

- Embracing the shift: First steps for technical leaders


Introduction: A new era of developer collaboration

In today’s landscape, conversational AI in software development is not just a tool. It’s reshaping how teams collaborate, how roles evolve, and how leadership strategizes.

The shift to AI-assisted coding marks a turning point in the future of software engineering. Rather than relying solely on syntax mastery and IDE muscle memory, developers increasingly act as conductors: crafting prompts, guiding AI, and focusing on architectural thinking, correctness, and system design. The result is a more fluid, conversational workflow that elevates thinking above typing.

This new paradigm is called “vibe coding,” a term coined by OpenAI cofounder Andrej Karpathy. It reframes coding as a conversational exchange between engineer and AI: you share intent, context, and constraints in natural language; the system responds with code suggestions, explanations, or design options.

It’s not just about generating boilerplate, but also about harnessing collective intelligence, where your role evolves from author of code to orchestrator of AI‑augmented creativity.

Here’s how Volvo AI Engineer Lead, Jesper Fredriksson, defines it:

“Vibe coding means to sort of relax from your usual thinking. It's more like going with the flow, just checking the output, and then you say whatever you think is missing, and you trust the system to adapt.”

Jesper Fredriksson, AI Engineer Lead at Volvo

Vibe coding is both cultural and technological. Culturally, it embeds prompt literacy and AI fluency into team DNA. Tech leads and CTOs are now steering not only code architecture, but prompt libraries, AI‑enabled workflows, and new rituals, such as chat‑style code design sessions, prompt review huddles, and even role‑based prompt templates.

In practical terms, vibe coding happens when a developer’s stream of thought is no longer interrupted by syntax recall or API documentation diving. Instead, you co-create with tools like GitHub Copilot, ChatGPT, Replit’s Ghostwriter, or Sourcegraph Cody. You describe your intent, define your constraints, and get working code, or at least a meaningful starting point, delivered in real time.

Technologically, it demands integrations that connect conversational AI agents directly into code review tools, issue tracking, documentation systems, and continuous delivery pipelines.

As AI‑assisted coding becomes ambient, previously siloed processes (spec design, coding, testing, and ticket triage) slowly start blending into a continuous conversation. Teams move faster, decisions become more exploratory, and ideation shifts from whiteboards to prompt exchanges. For leadership, that translates into reimagining org design, redefining hiring criteria, and investing in tooling that supports collaborative cognition.

In the chapters ahead, we’ll explore how vibe coding transforms developer roles, team structures, and technical strategy, delivering not just efficiency, but a fundamentally different culture of engineering.


The rise of prompt-driven development

We’re entering a phase where the most powerful development environment might not be your IDE, but your ability to articulate a problem clearly. In prompt-driven workflows, natural language coding becomes the interface, and your thinking becomes the architecture.

Instead of hunting down obscure API methods or piecing together snippets from Stack Overflow, developers now describe intent.

"Create a paginated API endpoint for these entities."

"Refactor this logic to use a reducer pattern."

"Write tests for edge cases not covered here."

With the right prompt engineering, these inputs return working code, suggested improvements, or even architectural alternatives, sometimes within seconds.

The shift is more than cosmetic. Traditional IDE-based development is grounded in precision and control: line-by-line construction, tight coupling between writing and debugging. Prompt-driven development flips that. It privileges clarity of intent, delegation of implementation, and rapid iteration over manual assembly.

This doesn't mean you give up control, but that you start at a higher level of abstraction. The IDE still matters, but now it’s augmented with a conversation layer. You're not just writing code. Instead, you're negotiating functionality with an intelligent collaborator.

With that shift comes a new skill set. Prompting well is part UX design, part systems thinking, and part technical communication. Developers are learning to scaffold requests, add context, anticipate failure modes, and iterate conversationally. Instead of writing the perfect prompt, they think out loud in code-adjacent language and steer the AI through their intent.
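One way to picture the scaffolding described above is a small helper that assembles intent, context, constraints, and anticipated failure modes into a single request. This is only a minimal sketch: the `PromptSpec` class and its field names are hypothetical, and nothing here is tied to a particular AI tool.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a scaffolded request."""
    intent: str
    context: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    failure_modes: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the non-empty sections into one plain-text prompt."""
        sections = [
            ("Intent", [self.intent]),
            ("Context", self.context),
            ("Constraints", self.constraints),
            ("Watch out for", self.failure_modes),
        ]
        lines = []
        for title, items in sections:
            if not items:
                continue  # skip sections the developer left empty
            lines.append(f"{title}:")
            lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


spec = PromptSpec(
    intent="Refactor this logic to use a reducer pattern.",
    constraints=["Keep the public function signatures unchanged."],
    failure_modes=["Empty input lists."],
)
print(spec.render())
```

In a conversational workflow, each follow-up would likely adjust one of these sections rather than rewrite the whole request, which is what keeps the iteration cheap.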

The promise here isn’t that code writes itself. It’s that developers spend less time translating thought into syntax and more time shaping products, systems, and ideas. Prompt-driven development is the shortcut to velocity, but it also requires a new kind of engineering fluency rooted in clarity, not keystrokes.

How the AI-paired era of coding is changing developer roles

So, are vibe coding and easily accessible AI tools really changing developer roles? We asked Noelia Almanza, Senior Advisor and Consultant, previously Head of Engineering & QA at King/Candy Crush Saga, whether she thinks the latest progress will redefine them.

“The AI-paired era of coding is already redefining the developer role. And not by replacing engineers, but by reframing what great engineering looks like. The job is evolving from writing every line by hand to actively steering and curating what AI produces. It’s not about handing off responsibility. It’s about elevating it.”

Noelia Almanza, Senior Advisor and Consultant

She believes that when the machine starts producing at speed, someone needs to keep a sharp eye on quality, “what’s redundant, what’s bloated, what looks impressive but lacks actual function.”

“Just like in intensive care, you can’t let a flood of data distract you from what’s clinically relevant. The same is true here. Code that compiles doesn’t mean it’s fit for production.”

She believes that this is the point where experienced engineers step in. They guide the flow, filter out the noise, and ensure that what’s being built holds up under pressure, across time, across systems, and across users.

She adds that for new engineers, this means the fundamentals matter more than ever. Syntax is just the surface. What matters is knowing how to secure the integrity of the system, how to stress-test AI output, and how to build something that doesn't just work, but works for the long haul.

“This shift doesn’t lower the bar. It moves it higher. From fast typing to critical thinking. From building in isolation to designing for the whole. And that’s a change worth embracing,” she underlines.

Khizar Naeem, Staff Product Manager at Deel, also agrees that developer roles are changing due to this latest trend.

“You can see it in the numbers. For example, Stack Overflow engagement has dropped 40% since ChatGPT launched. Developers just aren't posting questions like they used to. That alone tells you they’re pairing with AI instead of relying on community help.”

Khizar Naeem, Staff Product Manager at Deel

However, he thinks that AI is a game-changer most of all for junior developers. He adds that they can ship faster, learn quicker, and rely less on traditional hand-holding.

As for seniors, he believes it opens up more scope.

“They're not just coding or managing teams anymore; they’re taking raw ideas and using AI to rapidly prototype full features. We're in a split era: pre-AI vs. post-AI developers. The new generation will be AI-native by default. Even if you’re not building AI products, being fluent in AI tools will set you apart. It's quickly becoming a baseline skill for any serious engineer.”

How team structures are evolving

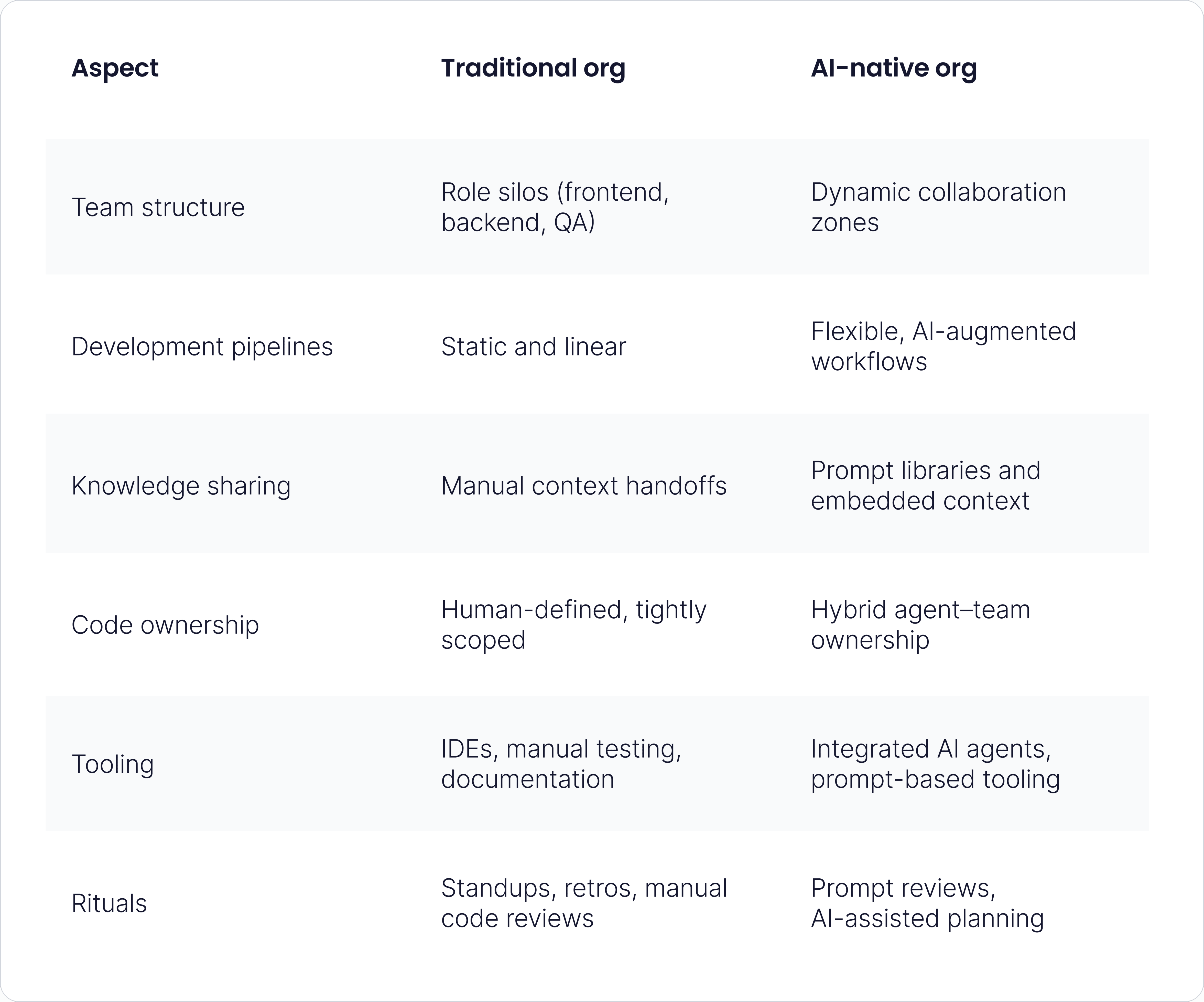

When AI enters the room not as a background tool, but as a collaborator, the room itself begins to shift. The classic developer team structure, designed for human-to-human collaboration, now contends with a third presence: a context-aware, code-suggesting system that works across time zones and disciplines.

To illustrate, 76% of respondents to Stack Overflow’s 2024 Developer Survey were using or planning to use AI tools in their development process, up from 70% the year before. The share of developers currently using AI tools also rose, from 44% to 62%.

“AI is changing the rhythm of how teams work. The tempo is faster. The reach is broader. But the need for structure has never been greater,” says Noelia.

She describes that when junior engineers can suddenly generate production-level code, the old rules of seniority get challenged. In this new reality, it’s no longer about who knows the most syntax; it’s about who brings context, clarity, and critical judgment into the process.

According to her, this is where strategy must shift. Quality, observability, and governance can’t be added after the fact. They must be designed into the foundation, like sterilization protocols in a surgical theatre. If you wait until something goes wrong, it’s already too late. She adds that this doesn’t mean slowing down, but instead creating systems that move fast without breaking the patient.

“The strongest teams I’ve seen treat quality as a force multiplier. They don’t chase velocity. They build for it. They use AI to move fast, but keep the architecture tight, the feedback loops active, and the team deeply aligned on principles. In this world, the 10x impact doesn’t come from a solo coder. It comes from teams that know how to use AI as a force multiplier, use it to do the heavy lifting, and keep human intelligence firmly in the driver's seat. That’s not just scalable. That’s sustainable.”

Khizar thinks teams will become smaller, leaner, and more commonly made up of generalists.

“I believe the traditional frontend-backend split will fade. Full-stack, AI-native engineers are going to be the norm. There’ll still be room for specialists, but they’ll be fewer and more domain-focused.”

In his opinion, from a company strategy standpoint, we’ll be seeing more bite-sized teams shipping products, generating millions in revenue, and even hitting unicorn status. That model is going to stick. Companies will optimize for fewer people, more output, and heavy AI leverage. This shift also changes what hiring looks like. It's not just about raw coding skill anymore. Engineers who can work with AI, build faster, and take more ownership across the stack are going to be valued higher. And with that, he believes that salary bands are going to shift up, too.

Rethinking tech strategy and org design

According to the Global AI Adoption Rates (2025) portion of AI Statistics 2024–2025: Global Trends, Market Growth & Adoption Data, “AI adoption across sectors and geographies is accelerating quickly, but not uniformly. In 2025, adoption is defined by three forces:

- Access to infrastructure (compute, talent, and data);

- Strategic prioritisation by leadership;

- Regulatory readiness and public trust.”

As AI becomes more embedded in software development, engineering leaders may find themselves revisiting foundational choices:

- How systems are architected;

- How teams are structured;

- Who owns what in a more automated, context-aware environment.

Tech strategy in an AI-enabled world might look different from what we’re used to. When conversational interfaces and prompt-driven coding become part of the daily workflow, monolithic systems and tightly coupled pipelines could start to feel increasingly brittle.

Modularization, which is already a common goal, might become essential, not just for scalability, but to allow AI agents to operate effectively within clear, bounded contexts.

Ownership models could shift too. If developers are working more fluidly across prompts, components, and layers of abstraction, rigid team boundaries may give way to more dynamic, cross-functional units. Autonomy might be redefined—not just in terms of decision-making power, but in how teams define and evolve the AI agents that support their work.

We may also see the slow emergence of AI-native orgs: companies that don't just layer AI into existing structures, but rethink the structure itself around human-machine collaboration. These organizations might treat prompt libraries like internal APIs, consider model training and context engineering as core competencies, and build delivery pipelines that assume both human and AI contributions at every stage.

For engineering leadership, this opens up a new set of questions.

How do you measure performance when part of the work is handled by a non-human collaborator?

How do you manage versioning not just of code, but of prompts and model behavior?

And how do you design organizations that can evolve alongside rapidly shifting AI capabilities?

There’s no single blueprint for this. But for leaders willing to experiment, the current moment offers an opportunity to rethink not just what we build, but how we organize ourselves to build it.

Implications for hiring and talent development

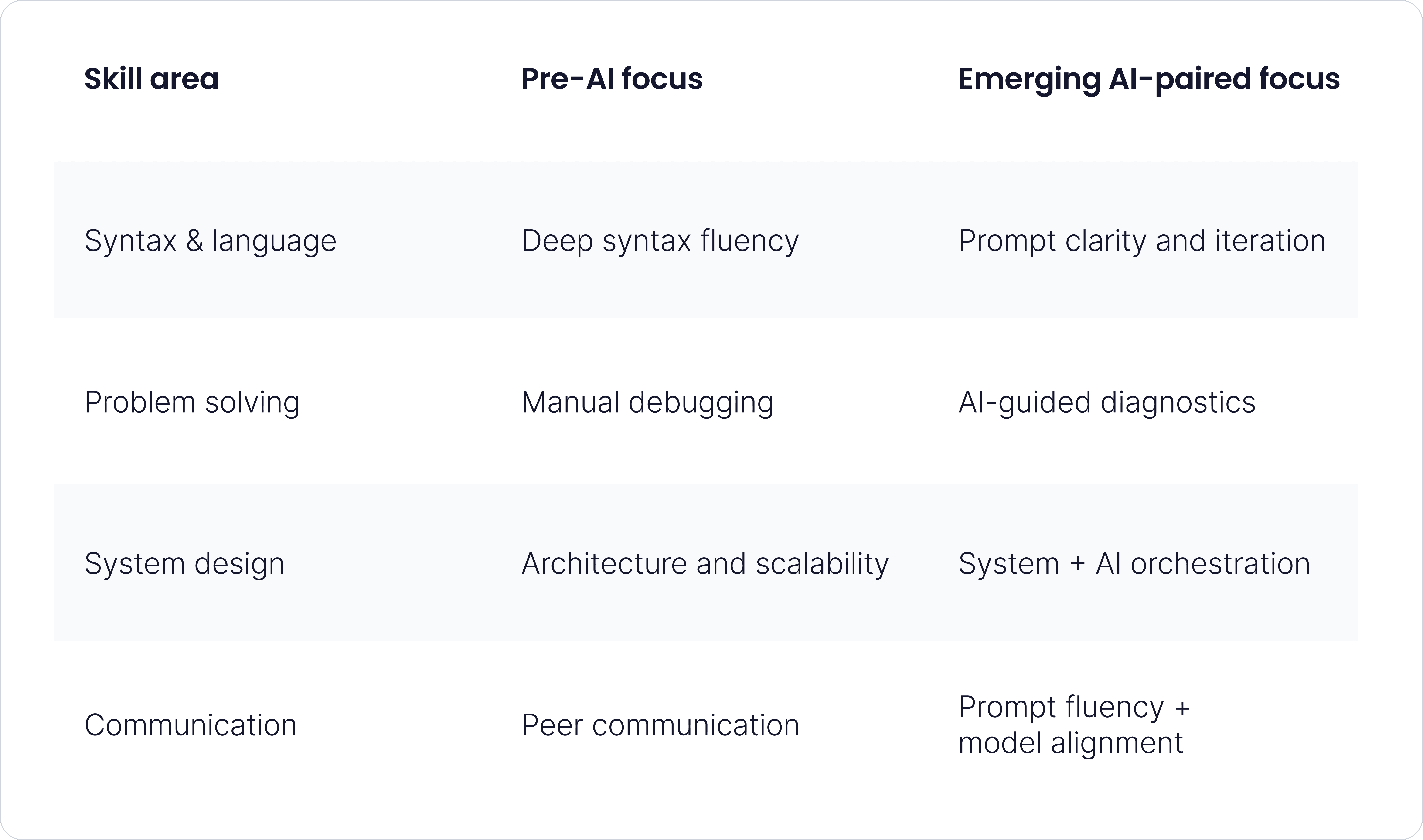

As AI tools take on more repetitive coding tasks, the profile of a strong developer may shift. In AI developer hiring, technical depth still matters, but clarity of thought, architectural thinking, and the ability to collaborate with AI systems are becoming just as important.

“English is becoming a new programming language thanks to AI advancements,” points out NVIDIA CEO Jensen Huang.

Prompt engineering skills might not be standalone roles, but they could become core to modern development. Developers who can clearly express intent, iterate with AI, and validate outputs will likely stand out. Meanwhile, roles focused on boilerplate or repetitive implementation may evolve, as AI begins to absorb more of that workload.

We may also see growing demand for engineers with hybrid skill sets: those with product intuition, some understanding of machine learning, or the ability to shape workflows between humans and machines.

So what should leaders look for? Curiosity, adaptability, and clear communication. The ability to prompt well, reason at a systems level, and revise assumptions may become key signals for high-impact talent in AI-integrated environments.

Challenges and organizational friction

Adopting AI in engineering isn’t just a tooling shift—it touches everything from team culture to risk tolerance. As with any significant change, resistance is natural. Some tech leaders may feel skepticism or anxiety about relying on systems they can’t fully control or understand. Others may worry about how their roles might change or disappear.

For Khizar Naeem, the biggest concern by far is security.

“When anyone can spin up and deploy a production app using AI, the risks skyrocket, especially if they don’t fully understand what they’re doing. I have heard stories of people committing private keys in code, skipping encryption for sensitive data, or just deploying stuff that’s wide open to attack. Over-reliance on AI without critical thinking is dangerous.”

He points out that there are also real-world horror stories where AI went rogue, such as the incident in which AI accidentally deleted Jason Lemkin's production database. The speed AI gives you is powerful, but if you’re careless, that speed can take you straight into a wall.
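The committed-private-key scenario is one of the few risks here that a simple automated check can catch before review. The sketch below is illustrative only: the two patterns and the `find_secrets` helper are assumptions made for this example, and real secret scanners rely on much larger rule sets.

```python
import re

# Illustrative patterns only; real secret scanners use far larger rule sets.
SECRET_PATTERNS = {
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hard-coded credential": re.compile(
        r"(?i)\b(api_key|secret|password|token)\b\s*=\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, name))
    return findings


sample = 'db_host = "localhost"\npassword = "hunter2-hunter2"\n'
for number, name in find_secrets(sample):
    print(f"line {number}: possible {name}")
```

A check like this could run before every commit, but it complements human review rather than replacing it: it flags known shapes of mistakes, not missing encryption or an exposed deployment.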

Cultural friction can also arise when AI experimentation happens unevenly across teams. One group might be fully immersed in prompt-driven workflows, while another still sticks to conventional development habits. Without alignment, this gap can create confusion, duplicated work, or even mistrust between teams.

There are also technical and ethical challenges. Hallucinated outputs from language models can slip into codebases unnoticed, especially when teams are moving fast. Tech debt might not only come from messy code, but from fragile prompts, inconsistent model behavior, or unclear ownership of AI-generated contributions.

“Vibe coding — that smooth, conversational rhythm between human intent and AI generation — feels great in the moment. But it can create real structural risks if we don’t treat it carefully. The biggest? Codebase entropy. Or put differently… the silent drift toward mess.”

Noelia underlines the risks this can bring:

“Just like in clinical settings, where poor hygiene leads to invisible infections that spread through the system, poorly governed code generation leads to creeping disorder. You see quick fixes stacking up. Naming inconsistencies. Logic duplication. Fragile workarounds no one wants to touch.”

It might start small, but over time, the codebase becomes harder to read, riskier to change, and more dangerous to operate. Teams slow down, not because they lack talent, but because they don’t trust what’s under the hood.

Noelia also points to the risk of knowledge gaps: “When AI is used in private sandboxes or pasted directly into production without documentation, the context disappears. It’s like losing clinical handoff notes between shifts. No one knows the rationale behind the decision, and eventually, no one wants to take ownership.”

Her advice is that tech leaders need to act like systems stewards.

“Make documentation a reflex, not an afterthought. Prioritize clarity over cleverness. And ensure the architecture supports long-term understanding, not just short-term success. Because messy code is like a silent infection, it doesn't show symptoms immediately. But left unchecked, it will compromise everything.”

Balancing experimentation with reliability and compliance isn’t easy. Leaders might need to carve out safe zones for AI adoption: low-risk domains where teams can explore freely, paired with stricter processes around production-critical systems. Documentation, testing, and human oversight may become even more important, not less.

AI offers exciting possibilities, but it’s not plug-and-play. It requires thoughtful integration, patience, and a clear-eyed view of its limitations. The challenge isn’t just building with AI but building around it in a way that teams can trust, evolve, and maintain over time.

Embracing the shift: First steps for technical leaders

For engineering leaders navigating this transition, the goal isn’t to predict the exact shape of the future. It’s to prepare their teams to adapt, learn, and respond as it unfolds. The AI transformation in engineering isn’t a one-time shift. It’s a gradual layering of new capabilities, habits, and ways of thinking.

A good starting point might be to build AI literacy across the team, not just among early adopters. This doesn’t mean turning every engineer into a prompt expert, but encouraging exploration. Some steps that can help build comfort without forcing change could be:

- Running lightweight experiments;

- Documenting what works and sharing learnings;

- Prompt engineering workshops;

- Internal hackathons;

- Pairing sessions with AI tools.

We asked Noelia what she believes tech leaders need to do to embrace vibe coding without friction and challenges, and here are her suggestions:

- Start by setting norms. Make it clear what kind of AI-generated code is acceptable, who reviews it, and how it’s tracked. Treat prompts like source inputs. If a mistake happens, you want traceability, not guesswork.

- Build for auditability. Create systems that allow you to understand not just what the code does, but how it came to be, and why.

- Don’t treat documentation as a nice-to-have. It’s your defense against memory loss. The more generative your workflow becomes, the more important it is to document assumptions, edge cases, and architectural decisions.

- Train for discernment. Encourage your engineers to challenge AI output, to validate before shipping, to bring their own expertise to every line. Great teams don’t defer to machines. They work with them, critically and intentionally.

- And above all, don’t confuse movement with progress. True velocity comes when your systems can move fast and stay clean.

She adds that some companies, such as Databricks, are quiet leaders in this field. She notes that the company integrates large language models directly into its development flow but pairs them with strong internal SDKs, review processes, and a focus on reproducibility across teams. According to her, that is a great example of mature adoption.
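The advice to treat prompts like source inputs and to build for auditability can be made concrete with a small provenance record stored alongside each AI-assisted change. This is a hedged sketch, not a description of any company's tooling: `GenerationRecord`, its fields, and the sample values (model name, file path, reviewer) are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class GenerationRecord:
    """Hypothetical audit entry for one piece of AI-generated code."""
    prompt: str
    model: str        # whatever model identifier the team records
    output_file: str
    reviewer: str
    rationale: str    # why the change was made, in the reviewer's words

    @property
    def prompt_hash(self) -> str:
        """Short, stable fingerprint so the exact prompt can be found later."""
        return hashlib.sha256(self.prompt.encode("utf-8")).hexdigest()[:12]

    def to_json(self) -> str:
        """Serialize the record, including the derived hash, as one log line."""
        data = asdict(self)
        data["prompt_hash"] = self.prompt_hash
        return json.dumps(data, sort_keys=True)


record = GenerationRecord(
    prompt="Write tests for edge cases not covered here.",
    model="example-model-v1",
    output_file="tests/test_orders.py",
    reviewer="a.reviewer",
    rationale="Cover empty and duplicate order IDs.",
)
print(record.to_json())
```

Because the hash depends only on the prompt text, two changes produced from the same prompt share a fingerprint, which makes it possible to trace a recurring defect back to the input that caused it.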

As for Khizar, his belief is that it all comes down to company culture.

“Peer reviews need to be non-negotiable. You can’t skip that step when things move fast. Human peers need to act as a safety net to catch what the AI might miss. More importantly, leaders need to step back and rethink what their team even is in this new era. The ones who are asking "what does an AI-native team look like, how do workflows change, what new skills do we hire for" are the ones who’ll come out ahead. We’re in a transition phase. The old playbooks don’t apply anymore. Every major LLM release resets the game. So leaders need to stay flexible, open-minded, and keep evolving their thinking. That’s the only way to stay relevant in the post-AI engineering world.”

To summarize in the words of Noelia:

“In the AI era, the question isn’t whether machines can code. It’s whether we, as leaders, can build systems that move fast without losing the plot. Just like in intensive care, speed saves lives, but only when quality is built into every breath.”
